Explore a generative artificial intelligence application that converts images into speech using Amazon SageMaker and Hugging Face

February 6, 2023

Sight loss comes in many forms. For some, it occurs from birth; for others, it is a slow decline over time, with multiple 'expiration dates': the day you can't see photos, recognize yourself or the faces of loved ones, or even read your mail. In a previous article, Enable the Visually Impaired to Hear Documents using Amazon Textract and Amazon Polly, the authors presented a text-to-speech app called 'Read for Me.' Accessibility has come a long way, but what about images?

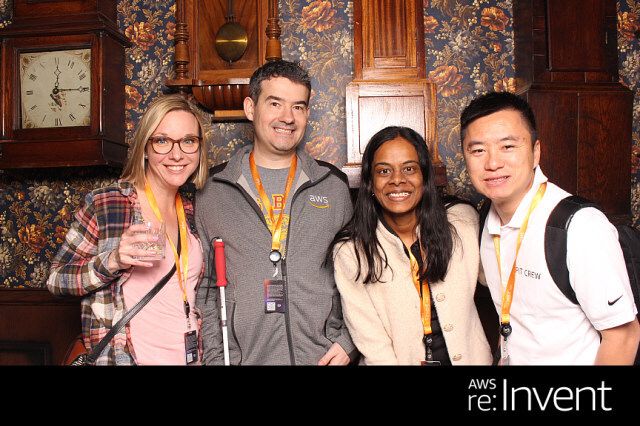

At the AWS re:Invent 2022 conference in Las Vegas, the authors presented 'Describe For Me' at the AWS Builders' Fair. It is a website that helps visually impaired people understand images through image captioning, facial recognition, and text-to-speech, a technology we call 'From Image to Speech'. Using several AI/ML services, 'Describe For Me' generates a caption for an input image and reads it aloud in a clear, natural-sounding voice in a variety of languages and dialects.

In this article, the authors will explain the solution architecture behind 'Describe For Me' and the design considerations of the solution.

Overview of the solution

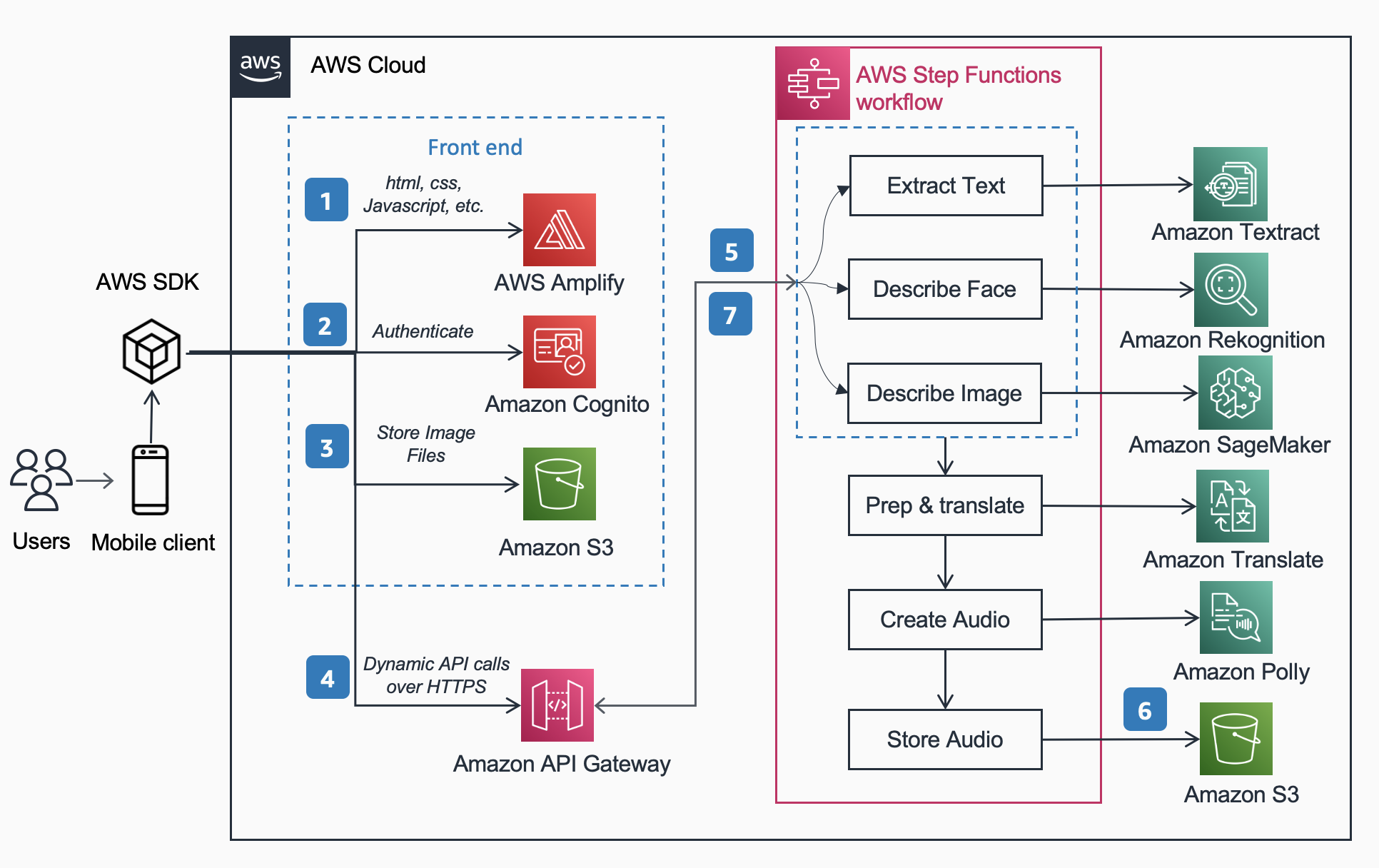

The reference architecture above shows the workflow of a user taking a photo with a phone and playing an MP3 file of the image caption.

The workflow includes the following steps:

- AWS Amplify distributes the DescribeForMe web app, which consists of HTML, JavaScript, and CSS, to end users' mobile devices.

- The Amazon Cognito identity pool grants temporary access to the Amazon S3 bucket.

- The user uploads an image file to the Amazon S3 bucket using the AWS SDK through the web app.

- The DescribeForMe web app invokes the backend AI services by sending the Amazon S3 object key in the payload to Amazon API Gateway.

- Amazon API Gateway starts an execution of the AWS Step Functions workflow. The state machine orchestrates the artificial intelligence/machine learning (AI/ML) services Amazon Rekognition, Amazon SageMaker, Amazon Textract, Amazon Translate, and Amazon Polly using AWS Lambda functions.

- The AWS Step Functions workflow creates an audio file as output and stores it in Amazon S3 in MP3 format.

- A pre-signed URL with the location of the audio file stored in Amazon S3 is sent back to the user's browser via the Amazon API Gateway. The user's mobile device plays the audio file using the pre-signed URL.
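The last two steps, producing the MP3 and handing a pre-signed URL back to the browser, might look roughly like the following Python sketch. It is not the authors' code: the function name `caption_to_presigned_mp3`, the default voice, and the one-hour expiry are assumptions for illustration. The Amazon Polly and Amazon S3 clients are passed in as arguments so the logic can be exercised without AWS; in a Lambda function you would pass `boto3.client("polly")` and `boto3.client("s3")`.

```python
from typing import Any


def caption_to_presigned_mp3(
    polly: Any,
    s3: Any,
    caption: str,
    bucket: str,
    key: str,
    voice_id: str = "Joanna",   # assumption: any Polly voice for the target language works
    expires_in: int = 3600,     # assumption: one-hour link lifetime
) -> str:
    """Hypothetical helper: speak `caption`, store the MP3 in S3, return a pre-signed URL."""
    # Amazon Polly returns the synthesized audio as a streaming body under "AudioStream".
    speech = polly.synthesize_speech(Text=caption, OutputFormat="mp3", VoiceId=voice_id)
    audio_bytes = speech["AudioStream"].read()

    # Store the MP3 output in Amazon S3.
    s3.put_object(Bucket=bucket, Key=key, Body=audio_bytes, ContentType="audio/mpeg")

    # A time-limited URL lets the mobile browser play the file without AWS credentials.
    return s3.generate_presigned_url(
        "get_object",
        Params={"Bucket": bucket, "Key": key},
        ExpiresIn=expires_in,
    )
```

Injecting the clients keeps the AWS calls at the edges, which makes the orchestration easy to test with stand-in objects.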

Solution walkthrough

In this section, the authors will focus on design considerations:

- Parallel processing in AWS Step Functions workflows

- Deploying the unified, pre-trained OFA (One For All) machine learning model from Hugging Face to Amazon SageMaker for image captioning

- Amazon Rekognition for face recognition

For a more detailed discussion of why the authors chose a serverless architecture, a synchronous workflow, an AWS Step Functions workflow, and a headless architecture, and of the benefits gained, read the previous blog article Enable the Visually Impaired to Hear Documents using Amazon Textract and Amazon Polly.

Parallel processing

Using parallel processing in the Step Functions workflow reduced compute time by up to 48%. When a user uploads an image to the S3 bucket, Amazon API Gateway starts an AWS Step Functions workflow execution. The following three Lambda functions then process the image in parallel.

- The first Lambda function, describe_image, analyses the image using the OFA_IMAGE_CAPTION model hosted on a SageMaker real-time endpoint to generate an image caption.

- The second Lambda function, describe_faces, first checks whether any faces are present using Amazon Rekognition's DetectFaces API and, if so, calls the CompareFaces API. This is because CompareFaces returns an error if no faces are found in the image. Calling DetectFaces first is also faster than simply running CompareFaces and handling the error, so pictures without faces are processed more quickly.

- The third Lambda function, extract_text, handles text-to-speech using Amazon Textract and Amazon Comprehend.
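In Amazon States Language, the three functions can be grouped into a single `Parallel` state, which waits for every branch to finish, so the wall-clock time is governed roughly by the slowest branch rather than the sum of all three. The Python sketch below builds such a definition as a dictionary and prints it as JSON. The Lambda ARNs, account ID, region, state names, and the follow-on `synthesize_speech` function are hypothetical placeholders, since the article does not publish its state machine.

```python
import json

REGION = "us-east-1"        # hypothetical
ACCOUNT = "123456789012"    # hypothetical


def lambda_arn(name: str) -> str:
    return f"arn:aws:lambda:{REGION}:{ACCOUNT}:function:{name}"


def branch(function_name: str) -> dict:
    # Each branch is its own small state machine with a single Task state.
    return {
        "StartAt": function_name,
        "States": {
            function_name: {
                "Type": "Task",
                "Resource": lambda_arn(function_name),
                "End": True,
            }
        },
    }


definition = {
    "Comment": "Analyze one image in parallel, then turn the results into speech",
    "StartAt": "AnalyzeImage",
    "States": {
        "AnalyzeImage": {
            "Type": "Parallel",
            # Every branch receives the same input (for example the S3 object key);
            # the state's output is an array of branch outputs, in branch order.
            "Branches": [branch(n) for n in ("describe_image", "describe_faces", "extract_text")],
            "Next": "SynthesizeSpeech",
        },
        "SynthesizeSpeech": {
            "Type": "Task",
            "Resource": lambda_arn("synthesize_speech"),  # hypothetical function
            "End": True,
        },
    },
}

print(json.dumps(definition, indent=2))
```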

Executing the Lambda functions one after another works, but running them in parallel is faster and more efficient. The table below shows the computation times recorded for three example images.

| People | Sequential time (ms) | Parallel time (ms) | Time savings (%) | Caption |
|---|---|---|---|---|
| 0 | 1869 | 1702 | 8 | A tabby cat curled up in a fluffy white bed. |
| 1 | 4277 | 2197 | 48 | A woman in a green blouse and black cardigan smiles at the camera. I recognize one person: Kanbo. |
| 4 | 6603 | 3904 | 40 | People standing in front of the Amazon Spheres. I recognize 3 people: Kanbo, Jack, and Ayman. |
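The savings column follows from (sequential − parallel) / sequential. Recomputing it from the table's own measurements gives about 8.9%, 48.6%, and 40.9%, so the table's whole-number figures appear to be truncated rather than rounded.

```python
# Measurements from the table: people in image -> (sequential ms, parallel ms).
TIMINGS_MS = {0: (1869, 1702), 1: (4277, 2197), 4: (6603, 3904)}


def savings_pct(sequential_ms: float, parallel_ms: float) -> float:
    """Percentage of the sequential time saved by running the branches in parallel."""
    return (sequential_ms - parallel_ms) / sequential_ms * 100


for people, (seq, par) in TIMINGS_MS.items():
    print(f"people={people}: {savings_pct(seq, par):.1f}% saved")
# prints:
# people=0: 8.9% saved
# people=1: 48.6% saved
# people=4: 40.9% saved
```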

Hugging Face is an open-source community and data science platform that allows users to share, build, train, and deploy machine learning models. After reviewing the models available on Hugging Face, the authors chose the OFA model because, as its creators describe it, it is "a task-agnostic and modality-agnostic framework that supports Task Comprehensiveness."

OFA is a step towards 'One for All', as it is a unified, multimodal, pre-trained model that transfers effectively to a range of downstream tasks. While the OFA model supports many tasks, including visual grounding, language understanding, and image generation, we used it for image captioning in the Describe For Me project to perform the image-to-text portion of the application. Check out the official OFA repository (ICML 2022) to learn how OFA unifies architectures, tasks, and modalities through a simple sequence-to-sequence learning framework.

To integrate OFA into the application, the authors cloned the repository from Hugging Face and containerized the model so it could be deployed to a SageMaker endpoint. The notebook in this repository is an excellent guide to deploying the OFA large model in a Jupyter notebook in SageMaker. After the inference script is containerized, the model is deployed behind a SageMaker endpoint, as described in the SageMaker documentation. The deployed model is then reachable through an HTTPS endpoint that is integrated with the 'describe_image' Lambda function, which analyses the image to create the image caption. The authors deployed the OFA tiny model because it is smaller, can be deployed in less time, and achieves similar performance.
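A minimal sketch of how the describe_image Lambda function could call the SageMaker real-time endpoint through the SageMaker Runtime `invoke_endpoint` API is shown below. The endpoint name, the JSON request shape (`{"s3_uri": ...}`), the `caption` response field, and the event shape are all hypothetical: the real schema is whatever the container's inference script defines. The runtime client is injected so the helper can be tested without AWS.

```python
import json
from typing import Any

# Hypothetical endpoint name; use the name you gave the deployed OFA model.
ENDPOINT_NAME = "ofa-tiny-image-caption"


def caption_from_endpoint(runtime: Any, bucket: str, key: str) -> str:
    # Hypothetical request schema; the container's inference script defines the real one.
    payload = {"s3_uri": f"s3://{bucket}/{key}"}
    response = runtime.invoke_endpoint(
        EndpointName=ENDPOINT_NAME,
        ContentType="application/json",
        Accept="application/json",
        Body=json.dumps(payload),
    )
    # The response body is a stream; read it and decode the JSON result.
    result = json.loads(response["Body"].read())
    return result["caption"]  # hypothetical response field


def lambda_handler(event: dict, context: Any) -> dict:
    # Hypothetical event shape passed in by Step Functions: {"bucket": "...", "key": "..."}.
    import boto3  # imported here so the helper above can be tested without AWS

    runtime = boto3.client("sagemaker-runtime")
    return {"caption": caption_from_endpoint(runtime, event["bucket"], event["key"])}
```

Passing an S3 location rather than the raw image bytes keeps the request small, but whether that works depends on the endpoint's execution role having read access to the bucket.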

Examples of the translation of image content into speech generated by 'Describe for me' are presented below:

Facial recognition

Amazon Rekognition Image provides the DetectFaces operation, which looks for key facial features such as eyes, nose, and mouth to detect faces in an input image. The solution uses this functionality to detect people in the input image. If a person is detected, the CompareFaces operation compares the face in the input image with the reference faces of the people Describe For Me has been set up to recognize, and describes the person by name. The authors chose Rekognition for face detection because of its high accuracy and how simple its out-of-the-box capabilities are to integrate into their own application.
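The DetectFaces-then-CompareFaces pattern can be sketched as follows. This is an illustration under stated assumptions, not the authors' implementation: reference photos of known people are assumed to live in S3 under hypothetical keys, and each known person is compared separately (CompareFaces matches the largest face in `SourceImage` against the faces in `TargetImage`). The sentence format mirrors the captions in the timing table. Pass `boto3.client("rekognition")` as `rekognition` in a real deployment.

```python
from typing import Any, Dict, List


def s3_image(bucket: str, key: str) -> dict:
    return {"S3Object": {"Bucket": bucket, "Name": key}}


def recognize_people(
    rekognition: Any,
    bucket: str,
    key: str,
    known_faces: Dict[str, str],  # hypothetical: name -> S3 key of a reference photo
    threshold: float = 90.0,      # assumption: minimum similarity to count as a match
) -> List[str]:
    target = s3_image(bucket, key)

    # Cheap check first: pictures without faces never reach CompareFaces.
    if not rekognition.detect_faces(Image=target)["FaceDetails"]:
        return []

    recognized = []
    for name, reference_key in known_faces.items():
        result = rekognition.compare_faces(
            SourceImage=s3_image(bucket, reference_key),
            TargetImage=target,
            SimilarityThreshold=threshold,
        )
        if result["FaceMatches"]:
            recognized.append(name)
    return recognized


def faces_sentence(names: List[str]) -> str:
    """Turn recognized names into a sentence like the captions in the table."""
    if not names:
        return ""
    if len(names) == 1:
        return f"I recognize one person: {names[0]}."
    if len(names) == 2:
        listed = f"{names[0]} and {names[1]}"
    else:
        listed = ", ".join(names[:-1]) + f", and {names[-1]}"
    return f"I recognize {len(names)} people: {listed}."
```

One CompareFaces call per known person scales linearly with the number of people; for a large set of known faces, a Rekognition face collection would be the more natural fit.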

Potential use cases

Alternate Text Generation for web images

All images on a web page should have alternative text so that screen readers can describe them to visually impaired people. Alternative text also helps with search engine optimization (SEO). Writing it can be time-consuming, because a copywriter has to provide it in the design document. The Describe For Me API can automatically generate alternative text for images. It can also be used in a browser plug-in that automatically adds captions to images lacking alternative text on any web page.
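The alt-text use case boils down to finding images that lack alternative text and filling them with generated captions. The sketch below assumes a caller-supplied `generate_caption` function standing in for a captioning service such as the Describe For Me API, whose interface the article does not document. A browser plug-in would do the same in JavaScript against the live DOM; Python's standard-library `HTMLParser` is used here only to keep the example self-contained.

```python
from html.parser import HTMLParser
from typing import Callable, Dict, List, Optional, Tuple


class MissingAltFinder(HTMLParser):
    """Collects the src of every <img> tag that has no alt attribute at all."""

    def __init__(self) -> None:
        super().__init__()
        self.missing: List[str] = []

    def handle_starttag(self, tag: str, attrs: List[Tuple[str, Optional[str]]]) -> None:
        if tag != "img":
            return
        attributes = dict(attrs)
        # alt="" deliberately marks a decorative image, so only a missing attribute counts.
        if "alt" not in attributes and attributes.get("src"):
            self.missing.append(attributes["src"])


def alt_text_suggestions(html: str, generate_caption: Callable[[str], str]) -> Dict[str, str]:
    """Map each image src lacking alt text to a generated caption (hypothetical service)."""
    finder = MissingAltFinder()
    finder.feed(html)
    finder.close()
    return {src: generate_caption(src) for src in finder.missing}
```

Self-closing tags such as `<img ... />` are also covered, because `HTMLParser` routes them through `handle_starttag` by default.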

Audio Description for Video

Audio description provides a narration track for video content to help visually impaired people follow videos. As image captioning becomes more reliable and accurate, it may become possible to build a workflow that creates an audio track from descriptions of key parts of a scene. Amazon Rekognition can detect scene changes, logos, and credit sequences, and can recognize celebrities. A future version of Describe For Me could automate this critical function for film and video.

Conclusions

In this article, the authors discussed using AWS services, including artificial intelligence and serverless services, to help visually impaired people see images. You can learn more about and use the Describe For Me project by visiting describeforme.com. You can also learn more about the unique features of Amazon SageMaker, Amazon Rekognition, and AWS' partnership with Hugging Face.

Third-Party ML Model Disclaimer for Guidance

This guidance is for informational purposes only. You should continue to conduct your independent assessment and take measures to ensure that you comply with your quality control practices and standards, as well as applicable rules, laws, regulations, licenses, and terms of use that apply to you, your content, and the third-party machine learning model referenced in this guidance. AWS has no control or authority over the third-party machine-learning model referenced in this guidance and makes no representations or warranties that the third-party machine-learning model is secure, virus-free, operational, or compatible with your production environment and standards. AWS makes no representations, warranties, or guarantees that any information in this guidance will lead to a particular outcome or result.