Large-scale data migration and cost savings with Amazon S3 File Gateway

Migrating data to the cloud requires experience with different types of data and the ability to maintain the source data structure and metadata attributes. Customers often store data in local files on traditional file servers, retaining the original timestamp of data creation for various reasons, including data lifecycle management. At the same time, customers struggle to define a data migration path to the cloud that supports preserving the source data structure, metadata, and hybrid implementation. This ultimately prevents customers from achieving the full range of benefits of cloud storage, including cost, performance, and scale.

Customers use Amazon S3 File Gateway and Amazon Simple Storage Service (Amazon S3) to migrate local data to the cloud and store it. Once the data is in Amazon S3, customers use S3 Lifecycle policies as a scalable way to automate object tiering across Amazon S3 storage classes based on each object's 'Creation Date', which helps optimize storage costs at scale. When a customer uploads files to Amazon S3 through S3 File Gateway, each object is stored with a new 'Creation Date' in S3 in place of the source file's 'Modification Date'. With the new 'Creation Date', the age of the object in Amazon S3 is reset, which prevents Lifecycle policies from using the original date from the source server to move the object to a lower-cost storage class. This presents a challenge for customers who have many older files locally and use the S3 File Gateway to migrate and upload to the

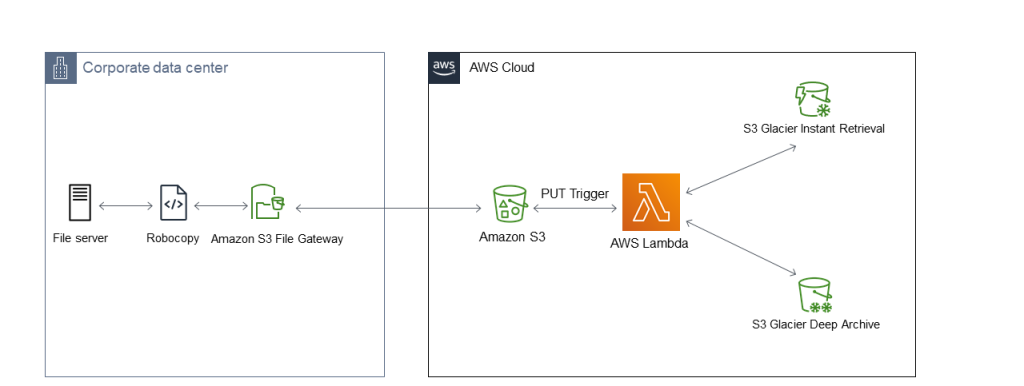

Solution architecture

This architecture illustrates migrating and uploading files with their original metadata from a local file system to the cloud. As shown, you copy the source data with Robocopy to the S3 File Gateway file share. Each source file carries metadata fields such as 'Date last modified' and 'Date created'. From here on, this post refers to this source metadata as the original stat() time. The solution then automates the data migration using the S3 File Gateway and stores the data in an S3 bucket, in a storage class selected according to the original stat() time.

For each object stored in the S3 bucket by Amazon S3 File Gateway, Amazon S3 stores a set of user-defined object metadata that preserves the source file's original stat() time. A Lambda function reads the original stat() time from the user-defined metadata fields and makes an API call to move the object to the appropriate S3 storage class. This example uses the S3 Glacier Instant Retrieval (GIR) and S3 Glacier Deep Archive (GDA) storage classes for demonstration purposes.

The architecture diagram shows two use cases. The first is migrating data from a local file server to an Amazon S3 bucket using the Amazon S3 File Gateway. The second is giving local users access to objects stored in Amazon S3 through the file interface of the Amazon S3 File Gateway.
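
The storage-class decision described in this architecture can be sketched as a small pure function. This is a minimal sketch, not the authors' implementation: the function name `choose_storage_class` and both age thresholds are assumptions for illustration. `GLACIER_IR` and `DEEP_ARCHIVE` are the S3 API names for the Glacier Instant Retrieval and Glacier Deep Archive storage classes.

```python
from typing import Optional

SECONDS_PER_DAY = 86400

# Hypothetical thresholds for illustration; choose values that match your retention policy.
GIR_AFTER_DAYS = 365
GDA_AFTER_DAYS = 3650


def choose_storage_class(mtime_epoch_s: float, now_epoch_s: float) -> Optional[str]:
    """Return the target S3 storage class for a file, or None to leave it where it is."""
    age_days = (now_epoch_s - mtime_epoch_s) / SECONDS_PER_DAY
    if age_days >= GDA_AFTER_DAYS:
        return "DEEP_ARCHIVE"
    if age_days >= GIR_AFTER_DAYS:
        return "GLACIER_IR"
    return None
```

Passing the current time as an argument, rather than reading the clock inside the function, keeps the rule easy to test against known dates.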

Services included

The solution consists of the following primary services. This section first describes each service, and then the post explains how they fit together in the overall solution.

Robocopy

Robocopy can copy file metadata along with the files themselves when copying from a Microsoft Windows file server. There are many methods for preserving file metadata for objects (files) migrated to Amazon S3. This post shows how to run the Robocopy command to synchronize file timestamps and permission metadata from the source to the S3 File Gateway file share.

Amazon S3 File Gateway

S3 File Gateway is an AWS service for migrating local file data to Amazon Simple Storage Service (Amazon S3). Customers use Amazon S3 File Gateway to store and retrieve Server Message Block (SMB) and Network File System (NFS) files as objects in Amazon S3. Once the objects are uploaded to Amazon S3, customers can use the many features available there, including encryption, versioning, and lifecycle management.

AWS Lambda

AWS Lambda is a serverless, event-driven compute service that runs code without provisioning or managing servers. In this solution, AWS Lambda automates moving objects to the appropriate Amazon S3 storage class through its deep integration with Amazon S3.

Overview of the solution

The original data resides on local NAS storage with SMB or NFS access. This post walks through the solution workflow in the following steps:

- Using Robocopy to copy data and preserve the original

- Configuring Amazon S3 File Gateway and Amazon S3 storage.

- Using AWS Lambda to automate S3 lifecycle policies.

Using Robocopy to copy data and preserve the original

When you copy data to S3 File Gateway with Robocopy, take care to use options that preserve metadata. This preserves the original file timestamp when copying data from local NAS systems to S3 File Gateway and Amazon S3. When you run the copy, use the appropriate Robocopy options to specify which file metadata to copy through Amazon S3 File Gateway. You can find more information in the S3 metadata documentation.

Here is an example showing how to run Robocopy so that it preserves the source file metadata, which S3 File Gateway ultimately stores as user metadata on the S3 object.

C:\> robocopy <source> <destination> /TIMFIX /E /SECFIX /V

Where:

- /TIMFIX fixes timestamps on all files, including skipped ones

- /E copies subfolders, including empty folders

- /SECFIX fixes NTFS permissions on all files, including skipped ones

- /V produces verbose log output

Here is an example of how to copy only permissions and ACL information:

C:\> robocopy <source> <destination> /e /sec /secfix /COPY:ATSOU

Robocopy has a list-only (/L) option that previews the changes it would make without copying anything. Note that Robocopy can be slow: its speed depends on the number of files in each prefix, the speed of the client workstation's main drive, and whether Amazon S3 versioning is enabled and the bucket contains delete markers. Refer to the Robocopy documentation for details.
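
If you script the migration, the preview step can be built into the script. The following is a minimal sketch under stated assumptions: the helper names `build_robocopy_command` and `run_robocopy` are hypothetical, the paths are placeholders, and `/SEC` is added so that `/SECFIX` has security information to apply. `/TIMFIX` is the Robocopy flag for fixing timestamps on all files.

```python
import subprocess
from typing import List


def build_robocopy_command(source: str, destination: str, dry_run: bool = True) -> List[str]:
    """Assemble a Robocopy argument list that preserves timestamps and NTFS permissions."""
    command = [
        "robocopy", source, destination,
        "/E",       # copy subfolders, including empty ones
        "/SEC",     # copy files with their security information
        "/SECFIX",  # fix security on all files, even skipped ones
        "/TIMFIX",  # fix timestamps on all files, even skipped ones
        "/V",       # verbose logging
    ]
    if dry_run:
        command.append("/L")  # list only: report what would be copied without copying
    return command


def run_robocopy(source: str, destination: str, dry_run: bool = True) -> int:
    """Run Robocopy (Windows only) and return its exit code."""
    # Robocopy exit codes below 8 indicate success, so check=True is deliberately not used.
    result = subprocess.run(build_robocopy_command(source, destination, dry_run))
    return result.returncode
```

Defaulting `dry_run` to `True` means a real copy only happens when the caller asks for it explicitly, for example `run_robocopy(src, dst, dry_run=False)` after reviewing the preview output.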

Configuring Amazon S3 File Gateway and Amazon S3 storage

In your local environment, you can deploy Amazon S3 File Gateway as a virtual machine on VMware, Microsoft Hyper-V, or Linux KVM, or as a dedicated hardware appliance. When Robocopy copies files from the source server to a file share on the S3 File Gateway, the gateway uploads the files to Amazon S3 as objects. The File Gateway also serves as a local cache for files that users access frequently. Files that are not in the cache, such as rarely used files, are retrieved from Amazon S3 through the file gateway.

When files are copied to a file share, the data is uploaded to Amazon S3 as objects. The original metadata of these files is retained in the 'user metadata' of the S3 objects. Although each Amazon S3 object gets a new creation time in S3, the value of interest here is the original 'mtime' stored in the 'user metadata'.
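
To make the 'mtime' value concrete, the sketch below converts it into a date. It assumes the user metadata key is `file-mtime` and that the value is a Unix timestamp in nanoseconds with a trailing `ns`, which is what the Lambda example in this post implies. The helper name `original_mtime` and the sample value are made up for illustration.

```python
from datetime import datetime, timezone
from typing import Mapping


def original_mtime(user_metadata: Mapping[str, str]) -> datetime:
    """Convert the 'file-mtime' user metadata value to a timezone-aware datetime."""
    raw = user_metadata["file-mtime"]
    # Assumption: the value looks like '1500000000000000000ns' (nanoseconds since the Unix epoch).
    nanoseconds = int(raw[:-2]) if raw.endswith("ns") else int(raw)
    return datetime.fromtimestamp(nanoseconds / 1_000_000_000, tz=timezone.utc)


# Made-up sample value; in practice the mapping would come from the 'Metadata' field
# of a head_object response.
print(original_mtime({"file-mtime": "1500000000000000000ns"}).isoformat())
```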

After the initial migration of data to S3 storage, a number of S3 features can be implemented to further optimise costs and improve the data's security. Follow these links to the documentation for a more in-depth look at the capabilities of File Gateway and Amazon S3.

- Amazon S3 File Gateway

- Amazon S3

- Amazon S3 Storage Classes

- Protecting data using encryption in Amazon S3

- Amazon S3 Lifecycle Management Policies

Using AWS Lambda to automate S3 lifecycle policies

When Amazon S3 File Gateway stores an object in Amazon S3 with a PUT REST call, the upload triggers an AWS Lambda function. The function reads the original file timestamp from the 'user metadata' of the S3 object. It evaluates the 'mtime' value (stored in Unix time format) against a rule to determine whether the object is eligible to move to another Amazon S3 storage class, and moves the object if it is. You can define your own thresholds to sort objects into different storage classes.
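
A trigger of this kind is typically configured as an S3 event notification on the bucket. The following is a hedged sketch of one way to set it up with boto3: the helper names and the function ARN are placeholders, not values from this post. It subscribes only to `s3:ObjectCreated:Put`, so a later `copy_object` call by the function (which emits `s3:ObjectCreated:Copy`) does not invoke the function again.

```python
from typing import Any, Dict


def build_notification_configuration(function_arn: str) -> Dict[str, Any]:
    """Build an S3 notification configuration that invokes a Lambda function on PUT uploads."""
    return {
        "LambdaFunctionConfigurations": [
            {
                "LambdaFunctionArn": function_arn,
                "Events": ["s3:ObjectCreated:Put"],
            }
        ]
    }


def apply_notification(bucket: str, function_arn: str) -> None:
    """Attach the configuration to a bucket (requires AWS credentials and permissions)."""
    import boto3  # imported here so the builder above can be used without AWS access

    s3 = boto3.client("s3")
    s3.put_bucket_notification_configuration(
        Bucket=bucket,
        NotificationConfiguration=build_notification_configuration(function_arn),
    )
```

The Lambda function also needs a resource-based policy that allows Amazon S3 to invoke it; that step is not shown here.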

Take a look at the example code below, which you can use to build a solution. Feel free to tweak and modify it as necessary to meet your requirements.

import time
from urllib.parse import unquote_plus

import boto3

# Bucket that backs the S3 File Gateway file share (example name).
bucket = 'demo-storagegateway'
s3 = boto3.client('s3')

# Objects whose original mtime is older than this many days are moved; adjust as needed.
AGE_THRESHOLD_DAYS = 365

def lambda_handler(event, context):
    # Object keys in S3 event notifications are URL-encoded, so decode before use.
    sourcekey = event['Records'][0]['s3']['object']['key']
    file_key_name = unquote_plus(sourcekey)
    print('sourcekey', sourcekey, file_key_name)

    # Read the user metadata that S3 File Gateway stored with the object.
    response = s3.head_object(Bucket=bucket, Key=file_key_name)
    metadatareturn = response['Metadata']['file-mtime']
    print('metadata', metadatareturn)

    # Assumption: the value is Unix time in nanoseconds with a trailing 'ns'.
    original_time_s = int(metadatareturn[:-2]) / 1e9
    age_days = (time.time() - original_time_s) / 86400

    if age_days > AGE_THRESHOLD_DAYS:
        # Copy the object onto itself to change its storage class.
        # Other valid StorageClass values include 'GLACIER_IR' and 'DEEP_ARCHIVE'.
        copy_source_object = {'Bucket': bucket, 'Key': file_key_name}
        print(copy_source_object)
        Testcopy = s3.copy_object(CopySource=copy_source_object, Bucket=bucket,
                                  Key=file_key_name, StorageClass='GLACIER')
        print('Testcopy', Testcopy)
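
The handler above reads only the first record of the event. An S3 event can carry more than one record, so a variant could decode every key before processing. This is a small sketch with a hypothetical helper name, `extract_object_keys`, and a made-up, trimmed-down sample event.

```python
from typing import Any, Dict, List
from urllib.parse import unquote_plus


def extract_object_keys(event: Dict[str, Any]) -> List[str]:
    """Return the decoded object key of every record in an S3 event notification."""
    return [
        unquote_plus(record["s3"]["object"]["key"])
        for record in event.get("Records", [])
    ]


# Made-up sample event containing only the fields this helper reads.
sample_event = {
    "Records": [
        {"s3": {"object": {"key": "reports/2015/annual+report%281%29.txt"}}},
    ]
}
print(extract_object_keys(sample_event))
```

Decoding with `unquote_plus` matters because spaces arrive as `+` and other special characters arrive percent-encoded in the event payload.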

Summary

This article discussed how to preserve the original file timestamps during the initial migration of data from a local file server to Amazon S3 through the S3 File Gateway. You also learnt how to use AWS Lambda's deep integration with Amazon S3 to move your files to cheaper storage based on a date threshold defined in your code. The advantage of this approach is that no data is missed, and you can migrate and preserve all metadata in a cost-effective way. This solution not only serves the data migration path, but also opens the door to your journey to the cloud.

Using AWS Storage Gateway, Amazon S3 and AWS Lambda, you can migrate your data to persistent, scalable and highly available cloud storage. You can take advantage of hybrid cloud storage by accessing your data locally without making any changes to your existing environment. This enables you to implement cloud native features to simplify data management, optimise the value of your data and achieve cost savings at scale.