Maximising price performance for big data workloads using Amazon EBS

Since the advent of big data more than a decade ago, Hadoop, an open-source platform for efficiently storing and processing large data sets, has played a vital role in storing, analyzing, and reducing this data to provide value to businesses. Hadoop stores structured, semi-structured, or unstructured data of any type across a cluster in a distributed manner using the Hadoop Distributed File System (HDFS), and processes the data stored in HDFS in parallel using MapReduce. When you host a Hadoop cluster on AWS, for example by running Amazon EMR or an Apache HBase cluster, you need block storage for intermediate processing, and choosing it well is key to achieving optimal performance while balancing costs.

In this article, the authors provide an overview of AWS's block storage service, Amazon Elastic Block Store (Amazon EBS), and recommend the EBS st1 volume type as the best fit for big data workloads. They then discuss the advantages of st1 volumes and the st1 configuration that maximizes price performance for the HDFS file system. They set up a test environment with three clusters, each using a different st1 volume configuration, and use Amazon CloudWatch metrics to monitor the performance of the st1 volumes attached to each cluster and determine which volume configuration performs best. Based on these observations, st1 volumes can be configured to balance cost and performance for big data workloads.

Amazon Elastic Block Store

Amazon Elastic Block Store (Amazon EBS) is an easy-to-use, scalable, and efficient block storage service designed for Amazon Elastic Compute Cloud (Amazon EC2). Amazon EBS offers multiple volume types backed by solid-state drives (SSDs) or hard disk drives (HDDs). These volume types differ in performance characteristics and price, so you can tailor storage performance and cost to your application's needs. SSD-based volumes (io2 Block Express, io2, io1, gp3, and gp2) are optimized for transactional workloads involving frequent read and write operations with small input/output (I/O) sizes, where the dominant performance attribute is I/O operations per second (IOPS). HDD-based volumes (st1 and sc1) are optimized for large streaming workloads where throughput is the dominant performance attribute. All EBS volume types work well as storage for file systems, databases, or any application that needs raw, unformatted, block-level storage, and they support dynamic configuration changes.

As customers continually look to optimize their cloud spending, it is essential to identify the right Amazon EBS volume type and the optimal volume configuration to meet workload performance requirements while reducing costs. The Amazon EBS Throughput Optimized HDD (st1) volume type costs a fraction of SSD-based volume types. It provides a baseline throughput of 40 MiB/s per TiB and can burst up to 250 MiB/s per TiB, with a maximum of 500 MiB/s per volume. Thanks to these performance attributes, st1 is well suited for large, sequential workloads such as Amazon EMR, ETL (extract, transform, load), data warehouses, and log processing.
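The st1 limits described above can be captured in a small helper. This is a minimal sketch that assumes only the per-TiB rates and the 500 MiB/s per-volume cap stated in this section; the function name `st1_throughput` is hypothetical, and the sketch does not model burst-credit depletion or EC2 instance bandwidth limits.

```python
# st1 performance limits as stated in the text (MiB/s); illustrative constants.
BASELINE_MIB_S_PER_TIB = 40.0
BURST_MIB_S_PER_TIB = 250.0
MAX_MIB_S_PER_VOLUME = 500.0


def st1_throughput(size_tib: float) -> tuple[float, float]:
    """Return (baseline, burst) throughput in MiB/s for one st1 volume.

    Both values scale linearly with volume size and are capped per volume.
    Burst credits and EC2 instance bandwidth limits are not modeled.
    """
    if size_tib <= 0:
        raise ValueError("volume size must be positive")
    baseline = min(BASELINE_MIB_S_PER_TIB * size_tib, MAX_MIB_S_PER_VOLUME)
    burst = min(BURST_MIB_S_PER_TIB * size_tib, MAX_MIB_S_PER_VOLUME)
    return baseline, burst


if __name__ == "__main__":
    for size in (0.5, 1, 2, 12.5):
        base, burst = st1_throughput(size)
        print(f"{size:>5} TiB: baseline {base:.0f} MiB/s, burst {burst:.0f} MiB/s")
```

For example, a 0.5 TiB (512 GiB) volume gets 20 MiB/s of baseline throughput and can burst to 125 MiB/s.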

HDFS and Amazon EBS st1 volumes

HDFS consists of NameNodes and DataNodes. Because DataNodes run on commodity hardware with raw disks, the probability that some node fails is high. To tolerate such failures, HDFS replicates data across several nodes according to the replication factor. The default replication factor is 3, meaning each piece of data is stored on three DataNodes.
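Replication multiplies the raw storage that a given amount of data consumes. The sketch below shows the arithmetic, assuming the default replication factor of 3 and ignoring non-HDFS overhead such as the operating system, logs, and reserved space; both function names are hypothetical.

```python
def raw_storage_needed(logical_tib: float, replication_factor: int = 3) -> float:
    """Raw capacity (TiB) consumed when every block is stored replication_factor times."""
    if replication_factor < 1:
        raise ValueError("replication factor must be at least 1")
    return logical_tib * replication_factor


def usable_capacity(raw_tib: float, replication_factor: int = 3) -> float:
    """Approximate logical data (TiB) that raw_tib of disk can hold after replication."""
    if replication_factor < 1:
        raise ValueError("replication factor must be at least 1")
    return raw_tib / replication_factor


if __name__ == "__main__":
    # A cluster with 10 TiB of raw storage and the default replication factor.
    print(f"usable: {usable_capacity(10):.2f} TiB")
```

With a factor of 3, 10 TiB of raw storage holds roughly 3.33 TiB of data.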

In HDFS, each file is divided into blocks with a default size of 128 MB, and I/O is largely sequential. These blocks are distributed across DataNodes and replicated across nodes. Because of the large block size and sequential I/O, st1 volumes are a good fit: they provide high sustained sequential throughput at a lower cost than SSD-based EBS volume types.
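To make the block layout concrete, this sketch counts how many blocks and block replicas a single file occupies. It assumes the 128 MB default means 128 MiB (134,217,728 bytes, the default `dfs.blocksize` in recent Hadoop releases) and a replication factor of 3; the helper name is hypothetical.

```python
HDFS_BLOCK_SIZE = 128 * 1024 * 1024  # 134,217,728 bytes


def hdfs_block_layout(file_size_bytes: int,
                      block_size: int = HDFS_BLOCK_SIZE,
                      replication_factor: int = 3) -> tuple[int, int]:
    """Return (blocks, block_replicas) for one file.

    The last block holds only the remaining bytes, so ceiling division is used.
    """
    if file_size_bytes < 0 or block_size <= 0 or replication_factor < 1:
        raise ValueError("invalid file size, block size, or replication factor")
    blocks = -(-file_size_bytes // block_size)  # integer ceiling division
    return blocks, blocks * replication_factor


if __name__ == "__main__":
    blocks, replicas = hdfs_block_layout(4 * 1024**3)  # a 4 GiB file
    print(blocks, replicas)
```

Under these assumptions, a 4 GiB file splits into 32 blocks of 128 MiB, which occupy 96 block replicas across the DataNodes.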

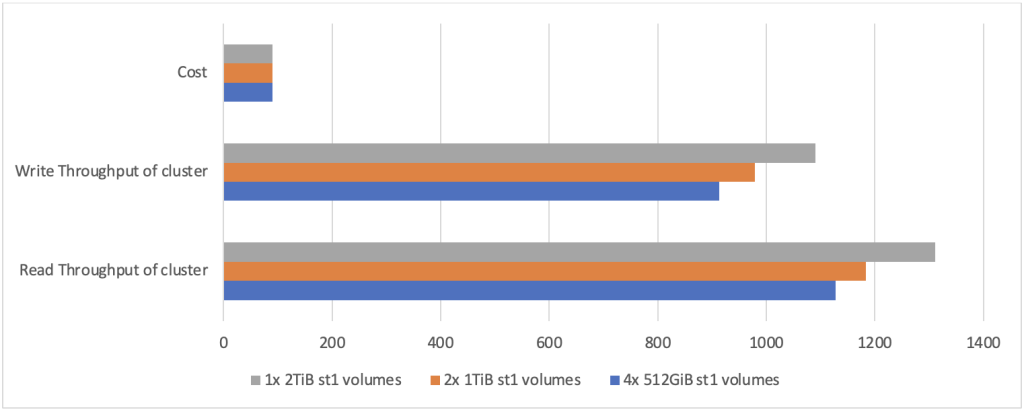

The authors used the TestDFSIO benchmark to simulate read and write workloads. The results for three cluster configurations follow. Each cluster used r5.xlarge EC2 instances (4 vCPUs and 32 GiB of memory), with 1 NameNode and 5 DataNodes. As the first two columns of the table show, each configuration attaches a different set of st1 volumes to each DataNode, for a total cluster storage of 10 TiB. The test uses 400 files of 4 GB each.

| st1 volumes attached to each DataNode | Total cluster storage | Read test execution time (s) | Write test execution time (s) | Cluster read throughput (MiB/s) | Cluster write throughput (MiB/s) | Cost/month |
|---|---|---|---|---|---|---|
| 4 volumes of 512 GiB each | 4 × 512 GiB × 5 = 10 TiB | 1452 | 1794 | 1128.37 | 913.26 | $90 |
| 2 volumes of 1 TiB each | 2 × 1 TiB × 5 = 10 TiB | 1384 | 1673 | 1183.81 | 979.31 | $90 |
| 1 volume of 2 TiB | 1 × 2 TiB × 5 = 10 TiB | 1249 | 1503 | 1311.76 | 1090.08 | $90 |
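The throughput columns appear to be the total data volume divided by the execution time. The sketch below checks this, assuming each "4 GB" file is 4,096 MiB; under that assumption it reproduces the throughput values in the table to within 0.01 MiB/s. The execution times are copied from the table, and the function name is hypothetical.

```python
NUM_FILES = 400
FILE_SIZE_MIB = 4 * 1024  # assumption: "4 GB" per file read as 4 GiB

# Execution times (seconds) copied from the table above.
EXEC_TIMES_S = {
    "4 x 512 GiB": {"read": 1452, "write": 1794},
    "2 x 1 TiB": {"read": 1384, "write": 1673},
    "1 x 2 TiB": {"read": 1249, "write": 1503},
}


def cluster_throughput_mib_s(exec_time_s: float,
                             num_files: int = NUM_FILES,
                             file_size_mib: float = FILE_SIZE_MIB) -> float:
    """Aggregate throughput = total data moved / wall-clock execution time."""
    if exec_time_s <= 0:
        raise ValueError("execution time must be positive")
    return num_files * file_size_mib / exec_time_s


if __name__ == "__main__":
    for config, times in EXEC_TIMES_S.items():
        read = cluster_throughput_mib_s(times["read"])
        write = cluster_throughput_mib_s(times["write"])
        print(f"{config}: read {read:.2f} MiB/s, write {write:.2f} MiB/s")
```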

Note that as the size of a single st1 volume increases, it can support higher sustained throughput. However, beyond 2 TiB the benefit of burst performance diminishes, because burst throughput is capped at 500 MiB/s. A larger volume still gains a constant baseline throughput of 40 MiB/s per TiB up to 12.5 TiB. At 12.5 TiB, the baseline throughput equals the volume's 500 MiB/s burst throughput, so performance stays the same beyond this volume size.
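The two thresholds in this note follow directly from the st1 rates. Writing $S$ for the volume size in TiB and $T$ for throughput in MiB/s, with a baseline of 40 MiB/s per TiB, a burst of 250 MiB/s per TiB, and a 500 MiB/s per-volume cap:

$$
T_{\text{baseline}}(S) = \min(40\,S,\ 500), \qquad T_{\text{burst}}(S) = \min(250\,S,\ 500)
$$

$$
250\,S = 500 \;\Rightarrow\; S = 2\ \text{TiB}, \qquad 40\,S = 500 \;\Rightarrow\; S = 12.5\ \text{TiB}
$$

Above 2 TiB only the baseline keeps growing with size; above 12.5 TiB both terms equal 500 MiB/s.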

Checking performance with Amazon CloudWatch metrics

Amazon CloudWatch provides Amazon EBS performance metrics that let you monitor volume performance and put application behavior in context. To find them, sign in to the AWS Management Console, search for CloudWatch in the search bar, and go to All metrics > EBS.

To calculate the read and write throughput of an EBS volume, use CloudWatch metric math as follows:

- Read throughput = SUM(VolumeReadBytes) / Period (in seconds)

- Write throughput = SUM(VolumeWriteBytes) / Period (in seconds)
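As a sketch of the same calculation outside the console, the helper below builds `MetricDataQueries` for the CloudWatch `GetMetricData` API. It uses the metric math `PERIOD()` function to divide each Sum by the period and converts bytes to MiB. It assumes a 60-second period by default; the function names are hypothetical.

```python
from typing import Any

MIB = 1024 * 1024


def throughput_mib_s(sum_bytes: float, period_s: int) -> float:
    """Throughput = SUM(VolumeReadBytes or VolumeWriteBytes) / period, in MiB/s."""
    if period_s <= 0:
        raise ValueError("period must be positive")
    return sum_bytes / period_s / MIB


def ebs_throughput_queries(volume_id: str, period_s: int = 60) -> list[dict[str, Any]]:
    """Build GetMetricData queries that return read/write MiB/s for one EBS volume."""
    queries: list[dict[str, Any]] = []
    for query_id, metric_name in (("r", "VolumeReadBytes"), ("w", "VolumeWriteBytes")):
        queries.append({
            "Id": query_id,
            "MetricStat": {
                "Metric": {
                    "Namespace": "AWS/EBS",
                    "MetricName": metric_name,
                    "Dimensions": [{"Name": "VolumeId", "Value": volume_id}],
                },
                "Period": period_s,
                "Stat": "Sum",  # bytes transferred in each period
            },
            "ReturnData": False,  # return only the derived throughput series
        })
    # Metric math: divide each Sum by its period, then convert bytes to MiB.
    queries.append({"Id": "read_mib_s", "Expression": f"r/PERIOD(r)/{MIB}",
                    "Label": "Read throughput (MiB/s)"})
    queries.append({"Id": "write_mib_s", "Expression": f"w/PERIOD(w)/{MIB}",
                    "Label": "Write throughput (MiB/s)"})
    return queries
```

Pass the returned list as `MetricDataQueries` to boto3's `cloudwatch.get_metric_data(...)` together with `StartTime` and `EndTime`; the derived series come back under the IDs `read_mib_s` and `write_mib_s`.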

In the following section, the authors compare the throughput performance of st1 volumes in three configurations to determine which configuration provides the best price performance.

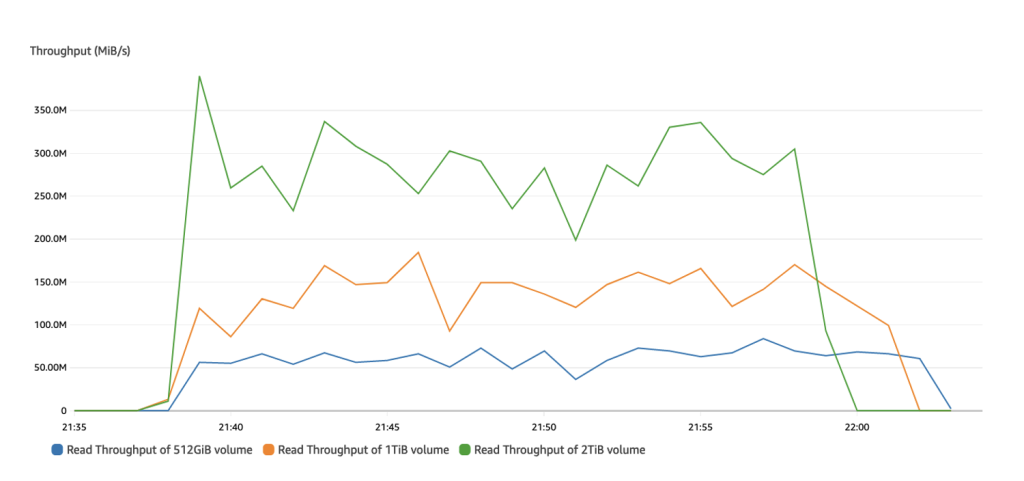

Read throughput of st1 volumes in three configurations

For read throughput, a 512-GiB volume peaked at ~75 MiB/s, a 1-TiB volume at ~180 MiB/s, and a 2-TiB volume at ~375 MiB/s.

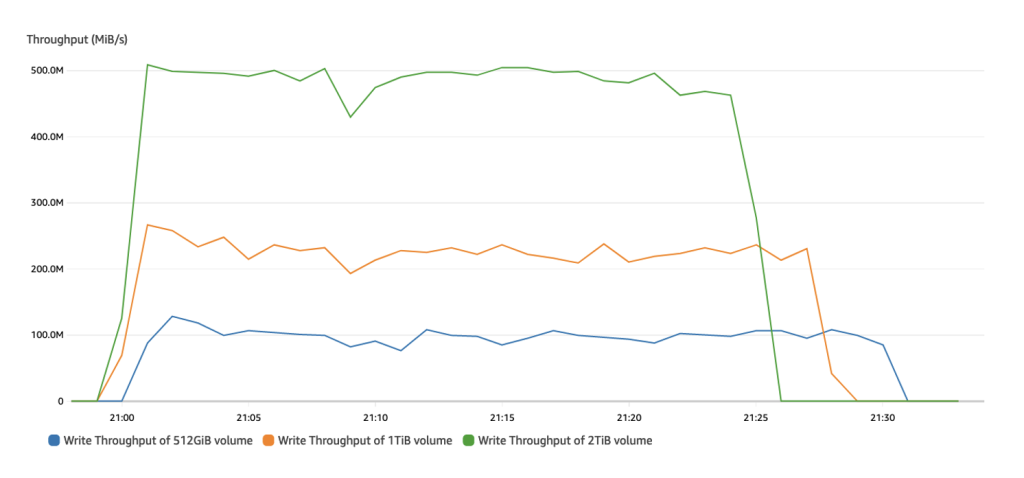

Write throughput of st1 volumes in three configurations

Next, consider write throughput. A 512-GiB volume peaked at ~125 MiB/s, a 1-TiB volume at ~250 MiB/s, and a 2-TiB volume at 500 MiB/s.

Based on the CloudWatch read and write throughput charts above, larger st1 volumes deliver higher throughput. This is because st1 throughput scales with provisioned size: 40 MiB/s per TiB of baseline and 250 MiB/s per TiB of burst, up to 500 MiB/s per volume. The write peaks of ~125, ~250, and 500 MiB/s match the burst limits for 512-GiB, 1-TiB, and 2-TiB volumes. This is also consistent with the table above, where cluster throughput increases as the size of the individual st1 volumes in the cluster increases.

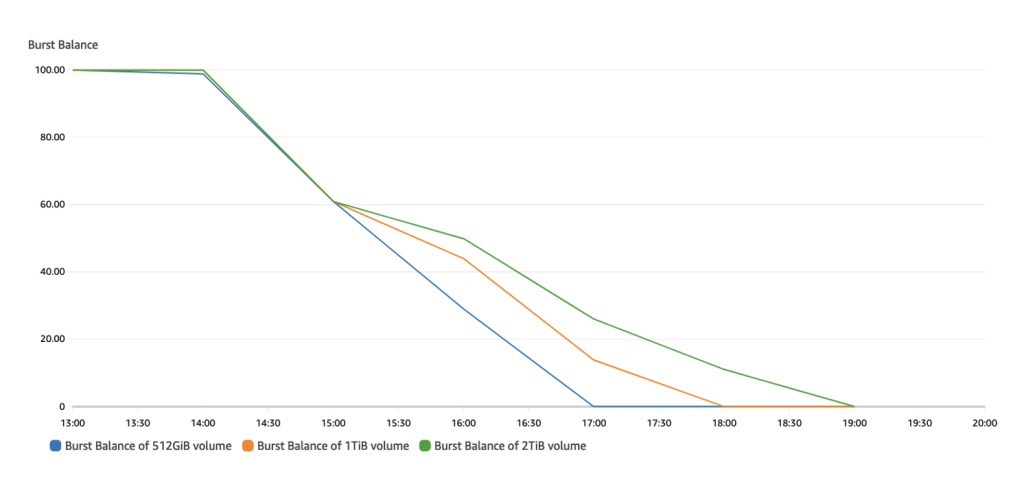

Burst balance of st1 volumes in the three configurations

The BurstBalance metric reports the percentage of throughput credits remaining in an st1 volume's burst bucket; these credits allow the volume to burst above its baseline throughput. The metric also shows how quickly st1 volumes deplete their credits over time. The graph above shows that the 512-GiB volume was the first to drop to 0, followed by the 1-TiB volume and then the 2-TiB volume. This indicates that larger st1 volumes can sustain higher performance for longer than smaller st1 volumes.
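To read the depletion order from raw datapoints rather than from the chart, the sketch below finds the first time each volume's BurstBalance reaches 0% and ranks the volumes accordingly. It assumes you have already retrieved BurstBalance datapoints (for example with CloudWatch `GetMetricData`) as `(timestamp, percent)` pairs; the function names are hypothetical.

```python
from datetime import datetime
from typing import Optional, Sequence

Point = tuple[datetime, float]  # (timestamp, BurstBalance in percent)


def first_depletion(points: Sequence[Point]) -> Optional[datetime]:
    """Earliest timestamp at which BurstBalance reaches 0%, or None if it never does."""
    for ts, balance in sorted(points, key=lambda p: p[0]):
        if balance <= 0.0:
            return ts
    return None


def depletion_order(series: dict[str, Sequence[Point]]) -> list[str]:
    """Volume labels ordered by when they first ran out of burst credits.

    Volumes that never reach 0% are placed last (the None check sorts first,
    so a real timestamp is never compared with the datetime.max placeholder).
    """
    first = {name: first_depletion(pts) for name, pts in series.items()}
    return sorted(first, key=lambda name: (first[name] is None, first[name] or datetime.max))
```

With boto3, each entry of `MetricDataResults` returned by `get_metric_data` exposes `Timestamps` and `Values`, which can be zipped into the `(timestamp, balance)` pairs this sketch expects.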

Conclusions

In this article, the authors configured three Amazon EMR clusters in a test environment, each with a different st1 volume configuration, and compared their performance when running HDFS. The CloudWatch metrics show that using larger st1 volumes in an Amazon EMR cluster with HDFS achieves higher throughput at the same cost as multiple smaller st1 volumes, thus optimizing price performance. Amazon EBS lets customers choose a storage solution that meets and scales with their HDFS workload requirements. The st1 volume type is available in all AWS Regions, and you can use the AWS Management Console to launch your first st1 volume or convert existing volumes by following this guide.