Serverless applications can lower the total cost of ownership (TCO) compared to a server-based cloud execution model because they shift operational responsibilities, such as server management, to the cloud provider. Deloitte's research on serverless total cost of ownership, conducted with Fortune 100 clients across various industries, shows that serverless applications can offer up to 57% cost savings compared to server-based solutions.

Serverless applications can offer lower costs in:

- Initial development: serverless allows developers greater flexibility, faster feature delivery, and a focus on differentiated business logic.

- Ongoing maintenance and infrastructure: serverless shifts the operational burden to AWS, including ongoing maintenance tasks such as installing patches and operating system updates.

This article focuses on the options available to reduce the direct costs of AWS when developing serverless applications. AWS Lambda is often the compute layer in these workloads and can be a significant part of the total cost.

To help optimize Lambda costs, this article discusses some of the most common cost optimization techniques, focusing on configuration changes rather than code updates. It is intended for architects and developers who are new to serverless development.

Serverless development facilitates experimentation and iterative improvement. All of the techniques described here can be applied before the application is developed or after it is deployed in a production environment. The techniques are roughly ordered by applicability: the first ones can apply to any workload, and the later ones apply to fewer workloads.

Right-sizing the Lambda function

Lambda uses a pay-per-use cost model that is based on three metrics:

- Memory configuration: allocated memory from 128 MB to 10,240 MB. CPU and other resources available to the function are allocated proportionally to memory.

- Function duration: the time the function runs, measured in milliseconds.

- Number of calls: the number of times the function runs.
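As a rough illustration of how the three metrics combine, the monthly charge can be sketched as follows. The symbols are introduced here for illustration only, and the prices are assumptions (approximate on-demand x86 rates in one Region, free tier ignored); check the current Lambda pricing page before relying on them.

```latex
% N = number of calls, M = configured memory in MB, D = average duration in ms
% p_gbs = assumed price per GB-second, p_req = assumed price per request
\text{cost} \approx N \cdot \frac{M}{1024} \cdot \frac{D}{1000} \cdot p_{\text{gbs}} + N \cdot p_{\text{req}}

% Worked example with assumed values:
% N = 10^6, M = 512, D = 200, p_gbs = 0.0000166667 USD, p_req = 0.20 / 10^6 USD
\text{cost} \approx 10^6 \cdot 0.5 \cdot 0.2 \cdot 0.0000166667 + 10^6 \cdot \frac{0.20}{10^6}
            \approx 1.67 + 0.20 = 1.87 \ \text{USD}
```

In this example the compute term (memory multiplied by duration) dominates the request term, which is why memory configuration is usually the first thing to review.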

Excessive memory allocation is one of the main drivers of Lambda cost increases. This is particularly common among builders unfamiliar with Lambda, who are used to provisioning hosts shared by multiple processes. Lambda scales so that each function execution environment handles only one request at a time. Each execution environment has access to fixed resources (memory, CPU, storage) to complete work on the request.

With the proper memory configuration, the function has the resources to do its job, and you only pay for the resources required. Although you also have direct control over function duration, this is a less effective cost optimization to implement. The engineering cost of shaving a few milliseconds off the duration can outweigh the savings. Depending on the workload, the number of function calls may be beyond your control. A later section discusses a technique to reduce the number of calls for certain types of workloads.

Access the memory configuration via the AWS Management Console or your preferred infrastructure as code (IaC) tool. The memory configuration setting defines the memory allocated, not the memory used by your function. Adjusting the memory size is a lever that can reduce the cost (or increase the performance) of a function. However, reducing a function's memory may not always result in savings. Reducing a function's memory also reduces the CPU available to the Lambda function, which can increase the duration of the function, resulting in either no savings or higher costs. Determining the optimal memory configuration to reduce costs while maintaining performance is essential.
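The trade-off between memory and duration can be made concrete with a break-even condition. This is a sketch under the simplifying assumption that the duration charge is proportional to configured memory multiplied by duration and that the per-request charge is unchanged; the symbols are introduced here for illustration.

```latex
% M_1, D_1 = current memory (MB) and duration (ms)
% M_2, D_2 = candidate memory and the duration measured at that setting
% Lowering memory from M_1 to M_2 reduces the duration charge only if
M_2 \cdot D_2 < M_1 \cdot D_1
\quad\Longleftrightarrow\quad
D_2 < D_1 \cdot \frac{M_1}{M_2}

% Example: M_1 = 1024, D_1 = 100, M_2 = 512
% The change saves money only if D_2 < 100 \cdot \frac{1024}{512} = 200 \ \text{ms}
```

If halving the memory more than doubles the duration, the function becomes both slower and more expensive, which is why the new duration has to be measured rather than assumed.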

AWS offers two approaches to right-sizing memory allocation: AWS Lambda Power Tuning and AWS Compute Optimizer.

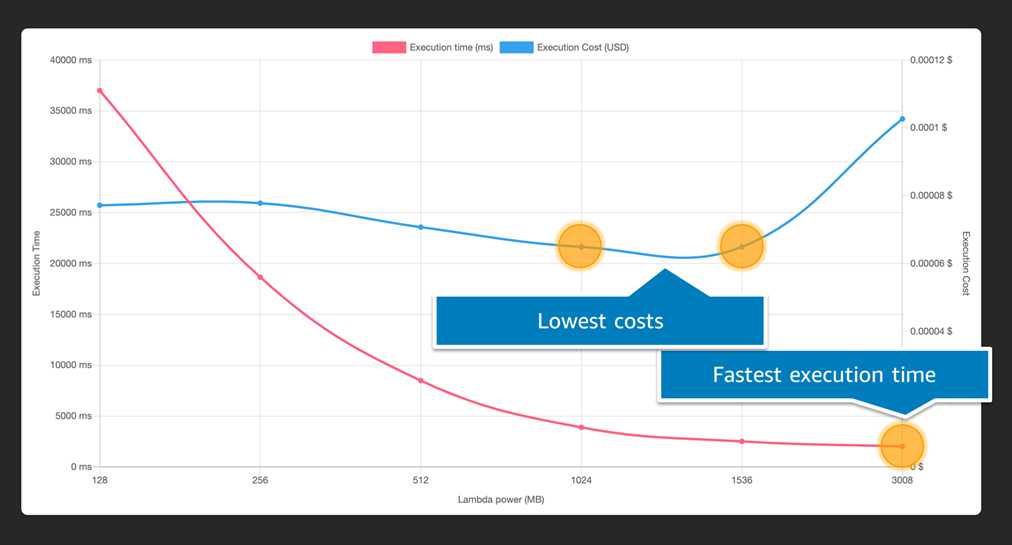

AWS Lambda Power Tuning is an open-source tool that you can use to empirically find the optimal memory configuration for your function, trading off cost against execution time. The tool runs multiple concurrent versions of your function with mock input at different memory allocations. The result is a chart that can help you find the "sweet spot" between cost and duration/performance. Depending on your workload, you can prioritize either of these. AWS Lambda Power Tuning is a good choice for new functions, and it can also help you choose between the two instruction set architectures Lambda offers.

AWS Compute Optimizer uses machine learning to recommend an optimal memory configuration based on historical data. Compute Optimizer requires the function to have been called at least 50 times in the last 14 days to make a recommendation based on previous usage, so it is most effective once the function is in production.

Lambda Power Tuning and Compute Optimizer help you determine the right memory size for your function. Use this value to update your function's configuration using the AWS Management Console or IaC.

This article includes sample code for the AWS Serverless Application Model (AWS SAM) to demonstrate how to implement optimizations. You can also implement the same changes using the AWS Cloud Development Kit (AWS CDK), Terraform, Serverless Framework, and other IaC tools.
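A minimal sketch of setting the memory size in an AWS SAM template. The resource name, handler, runtime, code path, and the value of 1024 MB are assumptions for illustration; use the value suggested by your own tuning results.

```yaml
Transform: AWS::Serverless-2016-10-31
Resources:
  # Hypothetical function name, handler, runtime, and code location.
  MyFunction:
    Type: AWS::Serverless::Function
    Properties:
      Handler: app.handler
      Runtime: python3.12
      CodeUri: src/
      # Allocated memory in MB (128 to 10,240). CPU scales with this value.
      # 1024 is an assumed result from Power Tuning or Compute Optimizer.
      MemorySize: 1024
```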


Set realistic function timeout

Lambda functions are configured with a maximum time that each call is allowed to run, up to 15 minutes. Setting an appropriate timeout can help reduce the cost of a Lambda-based application. Unhandled exceptions, blocking actions (for example, opening a network connection), slow dependencies, and other conditions can lead to longer-running functions or functions that run up to the configured timeout. Proper timeouts are the best protection against both slow and erroneous code. Beyond a certain point, the work done by a function, and the cost per millisecond of that work, is wasted.

The authors recommend setting the timeout to less than 29 seconds for all synchronous calls, that is, those where the caller is waiting for a response. Longer timeouts are appropriate for asynchronous calls, but timeouts longer than 1 minute should be treated as an exception that needs to be reviewed and tested.
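A minimal sketch of a timeout for a synchronous function in AWS SAM. The resource name, API path, and the 10-second value are assumptions for illustration; choose a value based on the observed duration of your own function.

```yaml
Transform: AWS::Serverless-2016-10-31
Resources:
  # Hypothetical function behind a synchronous API call.
  OrderApiFunction:
    Type: AWS::Serverless::Function
    Properties:
      Handler: app.handler
      Runtime: python3.12
      CodeUri: src/
      # Timeout in seconds. Kept well below 29 seconds because the
      # caller waits for the response; 10 is an assumed value.
      Timeout: 10
      Events:
        GetOrder:
          Type: Api
          Properties:
            Path: /orders/{id}
            Method: get
```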

Using Graviton

Lambda offers two instruction set architectures in most AWS regions: x86 and arm64.

Choosing Graviton can save you money in two ways. First, your functions can run more efficiently on the Graviton2 architecture. Second, you pay less for the time they run. Lambda functions powered by Graviton2 are designed to deliver up to 19% better performance at 20% lower cost. Consider starting with Graviton when developing new Lambda functions, especially those that do not require natively compiled binaries.

If your function relies on natively compiled binaries or is packaged as a container image, you must rebuild to transition between arm64 and x86. Lambda layers may also contain dependencies targeting one architecture or the other. The authors encourage you to review the dependencies and test the functionality before changing the architecture. The AWS Lambda Power Tuning tool also allows you to compare the price and performance of arm64 and x86 at different memory settings.

You can modify the architecture configuration of your function in the console or your chosen IaC. For example, in AWS SAM:
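A minimal sketch of selecting the arm64 architecture in an AWS SAM template. The resource name, handler, runtime, and code path are assumptions for illustration.

```yaml
Transform: AWS::Serverless-2016-10-31
Resources:
  # Hypothetical function with no natively compiled dependencies.
  MyFunction:
    Type: AWS::Serverless::Function
    Properties:
      Handler: app.handler
      Runtime: python3.12
      CodeUri: src/
      # Run on Graviton2. Omit this property or use x86_64 to stay on x86.
      Architectures:
        - arm64
```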


Filtering of incoming events

Lambda is integrated with over 200 event sources, including Amazon SQS, Amazon Kinesis Data Streams, Amazon DynamoDB Streams, Amazon Managed Streaming for Apache Kafka, and Amazon MQ. The Lambda service integrates with these event sources to retrieve messages and call functions as needed to process them.

When working with one of these event sources, you can configure filters to limit the events sent to your function. Depending on the number of events and the specificity of the filters, this technique can significantly reduce the number of function calls. Without event filtering, the function must be called first just to determine whether an event should be processed before any actual work is done. Event filtering eliminates the need for this upfront check and reduces the number of calls.

For example, you may want the function triggered only when orders over $200 are found in a message in the Kinesis data stream. You can configure the event filtering pattern using the console or IaC, similarly to memory configuration.

To implement the Kinesis stream filter using AWS SAM:
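A minimal sketch of such a filter, assuming each record's data is JSON with a hypothetical shape like {"order": {"value": 250}}. The resource names and the field names in the pattern are assumptions for illustration and must match your own payload.

```yaml
Transform: AWS::Serverless-2016-10-31
Resources:
  # Hypothetical stream that carries order messages.
  OrdersStream:
    Type: AWS::Kinesis::Stream
    Properties:
      ShardCount: 1
  LargeOrderFunction:
    Type: AWS::Serverless::Function
    Properties:
      Handler: app.handler
      Runtime: python3.12
      CodeUri: src/
      Events:
        OrdersEvent:
          Type: Kinesis
          Properties:
            Stream: !GetAtt OrdersStream.Arn
            StartingPosition: LATEST
            FilterCriteria:
              Filters:
                # Only records whose order value is greater than 200
                # reach the function; field names are assumed.
                - Pattern: '{"data": {"order": {"value": [{"numeric": [">", 200]}]}}}'
```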


If the event satisfies one of the event filters associated with the event source, Lambda sends the event to your function for processing. Otherwise, the event is discarded as successfully processed without a function call.

If you are building or running a Lambda function called by one of the previously mentioned event sources, review the available filtering options. This technique does not require any changes to the Lambda function's code: even if the function performs a pre-processing check, the check still completes successfully with filtering in place.

Read Filtering event sources for AWS Lambda functions to learn more about this.

Avoiding recursion

You may be familiar with the programming concept of a recursive function, that is, a function or procedure that calls itself. Although rare, customers sometimes inadvertently build recursion into their architecture, so that a Lambda function keeps calling itself.

The most common recursion pattern occurs between Lambda and Amazon S3. Actions in an S3 bucket can trigger a Lambda function, and recursion can happen when that Lambda function writes back to the same bucket.

Consider a use case where a Lambda function generates thumbnails of images uploaded by users. You configure the bucket to trigger the thumbnail generation function when a new object is placed in the bucket. What happens if the Lambda function writes the thumbnail to the same bucket? The process starts again, and the Lambda function runs on the thumbnail image itself. This is recursion, and it can lead to an infinite loop.

While there are many ways to prevent this problem, the best practice is to use a second S3 bucket to store the thumbnails. This approach minimizes changes to the architecture, as there is no need to change the notification settings or the primary S3 bucket. Read the article Avoiding Recursive Invocation with Amazon S3 and AWS Lambda to learn more about other approaches.
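A minimal sketch of the two-bucket layout in AWS SAM. All resource names and the DEST_BUCKET environment variable are assumptions for illustration, and read access to the upload bucket is omitted for brevity.

```yaml
Transform: AWS::Serverless-2016-10-31
Resources:
  # Hypothetical bucket that receives user uploads and triggers the function.
  UploadBucket:
    Type: AWS::S3::Bucket
  # Hypothetical separate bucket for thumbnails; it has no trigger,
  # so writing to it cannot call the function again.
  ThumbnailBucket:
    Type: AWS::S3::Bucket
  ThumbnailFunction:
    Type: AWS::Serverless::Function
    Properties:
      Handler: app.handler
      Runtime: python3.12
      CodeUri: src/
      Environment:
        Variables:
          # Assumed variable name the handler would read to find the target.
          DEST_BUCKET: !Ref ThumbnailBucket
      Policies:
        - S3WritePolicy:
            BucketName: !Ref ThumbnailBucket
      Events:
        ImageUploaded:
          Type: S3
          Properties:
            Bucket: !Ref UploadBucket
            Events: s3:ObjectCreated:*
```

Because only the upload bucket has an event notification, the function's own output never re-enters the trigger path.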

If you encounter recursion in your architecture, set the Lambda reserved concurrency to zero to stop the function from running. Wait a few minutes to hours before raising the reserved concurrency limit. Since S3 events are asynchronous calls with automatic retries, recursion may remain visible until the problem is resolved or all events expire.
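A minimal sketch of this emergency stop in AWS SAM, assuming a hypothetical function named ThumbnailFunction. The same setting can also be changed directly in the console, which is usually faster during an incident.

```yaml
Transform: AWS::Serverless-2016-10-31
Resources:
  # Hypothetical function caught in a recursive loop.
  ThumbnailFunction:
    Type: AWS::Serverless::Function
    Properties:
      Handler: app.handler
      Runtime: python3.12
      CodeUri: src/
      # Zero reserved concurrency throttles every call, which stops the loop.
      # Remove or raise this value once the cause has been fixed.
      ReservedConcurrentExecutions: 0
```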

Conclusion

Lambda offers several techniques for minimizing infrastructure costs, whether you are just starting with Lambda or already have numerous functions deployed in a production environment. Combined with lower initial development and ongoing maintenance costs, a serverless solution can offer a low total cost of ownership. Learn these techniques and more with a serverless optimization workshop.

To learn more about serverless architectures, find reusable patterns, and stay up to date, visit Serverless Land.