Migration of a microservices-based development environment to AWS for NeuroSYS

For NeuroSYS, we migrated a microservices-based development environment to the AWS cloud, running on the Kubernetes platform. Since the client had no prior in-house experience operating applications in AWS or in containers, the scope of our cooperation also included onboarding training for NeuroSYS employees, and the whole project was built around CI/CD.

NeuroSYS is a software house that creates dedicated IT solutions, especially in the area of web, mobile and artificial intelligence applications. One of the company's products is nsFlow - an integrated platform that enables designing and launching AR applications in order to optimize business processes.

Our cooperation with NeuroSYS began with an interview, during which we established the client's exact business and technological expectations. The most important were: the ability to flexibly and dynamically adjust the size of the infrastructure to current needs, the ability to rapidly deploy multiple environments, and infrastructure built in line with the DevOps methodology and the IaC (Infrastructure as Code) approach, which minimizes the effort of restoring and copying environments (dev/test/stage and disaster recovery). Cost optimization and adherence to good security practices for cloud systems were also important to the customer.

Cloud platform and AWS accounts

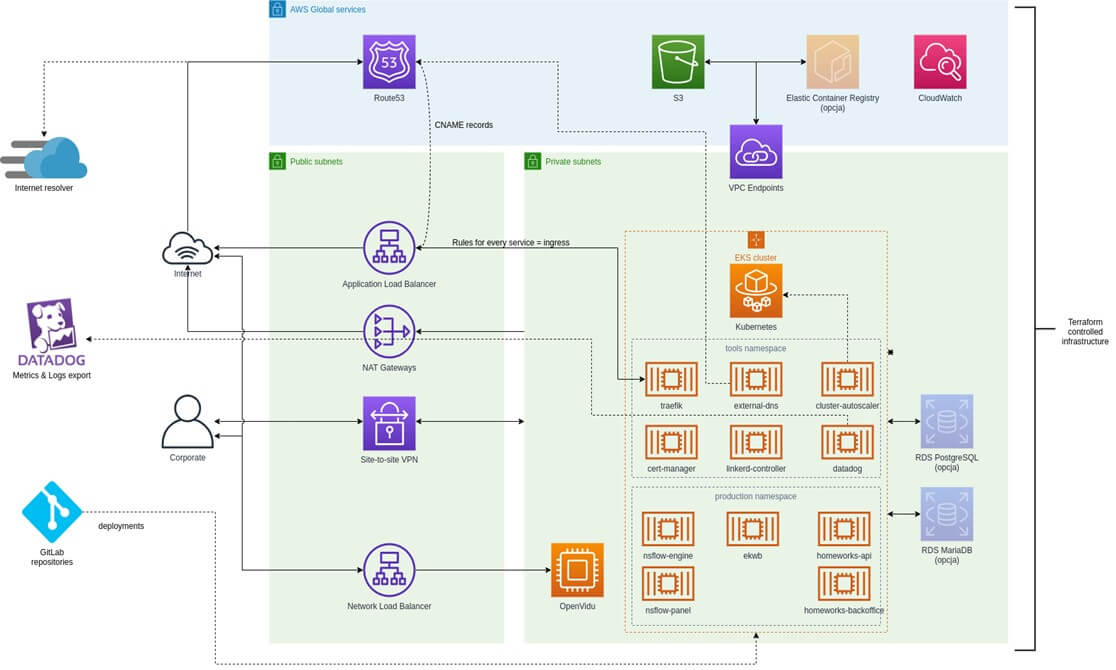

We defined and implemented the entire infrastructure using Terraform. The platform's implementation code is kept on the client's servers. The client was also responsible for preparing a dedicated GitLab repository and granting Hostersi the rights to use and modify the code during the work. The full design of the cloud infrastructure is shown in the accompanying figure.

The AWS account was set up by the customer. Together, we agreed on the appropriate roles and chains of trust for the organization's respective accounts. The components whose configuration was left to Hostersi were the service accounts used by automation, the logging of AWS API access and usage (AWS CloudTrail), and the components used by IaC (an AWS S3 bucket and a DynamoDB table) to store and protect the infrastructure state.
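A common way to use an S3 bucket and a DynamoDB table with Terraform is as a remote state backend with state locking. The sketch below assumes that pattern (the article does not spell it out) and generates a backend block in Terraform's JSON syntax. The bucket, table, key and region values are hypothetical placeholders.

```python
import json


def s3_backend_config(bucket: str, lock_table: str, region: str, key: str) -> dict:
    """Build a Terraform JSON-syntax backend block for remote state in S3."""
    return {
        "terraform": {
            "backend": {
                "s3": {
                    "bucket": bucket,              # S3 bucket holding the state file
                    "key": key,                    # object path of the state file
                    "region": region,
                    "dynamodb_table": lock_table,  # table used for state locking
                    "encrypt": True,               # encrypt the state at rest
                }
            }
        }
    }


# Hypothetical names, for illustration only.
backend = s3_backend_config(
    bucket="example-terraform-state",
    lock_table="example-terraform-locks",
    region="eu-central-1",
    key="dev/terraform.tfstate",
)
backend_json = json.dumps(backend, indent=2)
print(backend_json)
```

The printed document could be saved as a file such as `backend.tf.json` next to the rest of the Terraform code; one file per environment with a different `key` keeps the states separate.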

VPC virtual network

To keep the production environment completely separate, we created independent VPC networks for the application components and for the services they use. Each virtual network includes a private area (private subnets) that has no direct access to the Internet; outbound traffic from it goes through NAT Gateways. Each area (private and public) contains three subnets, each located in a different Availability Zone (AZ).
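The layout of three public and three private subnets, one per zone, can be sketched with Python's standard `ipaddress` module. The VPC CIDR, the subnet size and the zone names below are assumptions made for illustration, not values from the project.

```python
import ipaddress


def plan_subnets(vpc_cidr: str, zones: list[str], new_prefix: int = 20) -> dict:
    """Carve one public and one private subnet per zone out of a VPC range."""
    blocks = ipaddress.ip_network(vpc_cidr).subnets(new_prefix=new_prefix)
    plan = {}
    for tier in ("public", "private"):
        # Take the next free block for every zone in this tier.
        plan[tier] = {zone: str(next(blocks)) for zone in zones}
    return plan


# Hypothetical VPC range and zones.
plan = plan_subnets(
    "10.10.0.0/16",
    ["eu-central-1a", "eu-central-1b", "eu-central-1c"],
)
for tier, subnets in plan.items():
    for zone, cidr in subnets.items():
        print(f"{tier:8} {zone} {cidr}")
```

With a /16 VPC and /20 subnets only six of the sixteen available blocks are used, which leaves room for additional tiers later.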

Additionally, to provide encrypted access to the private area of the cloud from the corporate office network (and only from there), we deployed a site-to-site VPN tunnel to the private area.

Other solutions that we implemented in this area include a dedicated connection from the private area to S3 object storage, so that this traffic stays on a designated network and never crosses the Internet, and non-overlapping IP addressing for each VPC network to facilitate VPN connections.
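Address ranges that do not overlap matter because a VPN cannot route unambiguously between two networks that share addresses. A minimal check, assuming hypothetical CIDR ranges for the VPCs and the office network, might look like this:

```python
import ipaddress
from itertools import combinations


def overlapping_pairs(cidrs: dict[str, str]) -> list[tuple[str, str]]:
    """Return every pair of named networks whose address ranges overlap."""
    networks = {name: ipaddress.ip_network(cidr) for name, cidr in cidrs.items()}
    return [
        (first, second)
        for first, second in combinations(networks, 2)
        if networks[first].overlaps(networks[second])
    ]


# Hypothetical ranges, for illustration only.
ranges = {
    "vpc-apps": "10.10.0.0/16",
    "vpc-services": "10.20.0.0/16",
    "office": "192.168.0.0/24",
}
conflicts = overlapping_pairs(ranges)
print(conflicts or "no overlapping ranges")
```

Running such a check before adding a new VPC or VPN connection catches addressing conflicts while they are still cheap to fix.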

Kubernetes Platform

We built the Kubernetes cluster on Amazon Elastic Kubernetes Service (EKS), which, in addition to the advantages inherent to the Kubernetes platform, offers cost optimization of cluster maintenance through autoscaling, support for multiple environments made up of decentralized microservices, self-healing of deployed applications, and an SLA with financial compensation if service availability falls below 99.95%. Just as importantly for the customer, Amazon EKS supports fast and frequent deployments of new applications.
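To put the 99.95% figure in perspective, the short calculation below converts an availability percentage into the downtime it allows. The 30-day month is an assumption chosen for the arithmetic.

```python
def allowed_downtime_minutes(availability_pct: float, days: int = 30) -> float:
    """Minutes of downtime permitted in the period at a given availability."""
    total_minutes = days * 24 * 60
    return total_minutes * (1 - availability_pct / 100)


downtime = allowed_downtime_minutes(99.95)
print(round(downtime, 1))  # 21.6
```

In other words, 99.95% availability corresponds to roughly 21.6 minutes of downtime in a 30-day month.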

Additionally, to ensure that the cluster functions in the way NeuroSYS expects, we installed the following components in the cluster:

- Ingress controller Traefik - provides ingress support along with cost optimization in the form of a single NLB for all supported ingresses.

- Certificate controller cert-manager - responsible for issuing SSL certificates dynamically; this component takes over the role of Rancher's lets-encrypt.

- Autoscaler cluster-autoscaler - enables automatic scaling of groups of machines together with rescheduling of pods.

- DNS entry updater external-dns - automates handling of host addresses defined in ingresses.

- Backup and restore tool Velero - creates backup copies of all cluster elements and their data.

- Service mesh Linkerd - provides automatic mTLS, traffic metrics and request success rates for individual services.

All of the above tools were installed with, and are managed by, Helm. This allows the customer to keep the configuration values of each tool for a given cluster in code, in line with the DevOps methodology, to control changes and roll back to previous configurations when needed, and to easily maintain and update the full configuration of a given tool.
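Keeping per-cluster values in code usually means layering a small override file on top of shared defaults. The sketch below imitates, in simplified form, how such layers combine: nested maps are merged key by key, and anything else is replaced. The keys and values are hypothetical, and Helm's own merge has extra rules (for example, `null` removes a key) that are not modeled here.

```python
from copy import deepcopy


def merge_values(base: dict, override: dict) -> dict:
    """Recursively merge override into base without mutating either input."""
    merged = deepcopy(base)
    for key, value in override.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            merged[key] = merge_values(merged[key], value)  # merge nested maps
        else:
            merged[key] = deepcopy(value)  # scalars and lists are replaced
    return merged


# Hypothetical shared defaults and a per-cluster override.
defaults = {"replicas": 1, "resources": {"cpu": "100m", "memory": "128Mi"}}
dev_cluster = {"replicas": 3, "resources": {"memory": "256Mi"}}

effective = merge_values(defaults, dev_cluster)
print(effective)
```

Because both layers live in version control, a roll-back to a previous configuration is simply a revert of the override file followed by a new Helm upgrade.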

Databases

When designing the database layer, our first priority was the client's requirement to follow currently accepted practices in software operation and maintenance. We therefore deployed PostgreSQL databases as Kubernetes pods, backed by EBS disks through PersistentVolumeClaims. This follows the microservice architecture pattern, in which each microservice has its own database, and the team responsible for the microservice is also responsible for maintaining and configuring that database. This approach also let us separate the hardware resources of the databases belonging to individual microservices.
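A PersistentVolumeClaim for one such database could be generated as shown below. This is a minimal sketch: the claim name, the size and the `gp2` storage class name are assumptions, and the real manifests in the project may differ.

```python
import json


def postgres_pvc(name: str, size_gi: int, storage_class: str = "gp2") -> dict:
    """Build a PersistentVolumeClaim manifest for a single database pod."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": {
            # An EBS volume attaches to one node at a time.
            "accessModes": ["ReadWriteOnce"],
            "storageClassName": storage_class,  # assumed EBS-backed class
            "resources": {"requests": {"storage": f"{size_gi}Gi"}},
        },
    }


# Hypothetical claim for the database of one microservice.
pvc = postgres_pvc("orders-postgres-data", size_gi=20)
print(json.dumps(pvc, indent=2))
```

Since `kubectl apply -f` accepts JSON as well as YAML, the printed manifest can be applied directly or embedded in a Helm chart template.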

RabbitMQ data buses

As with the databases, we followed the requirement to stay compatible with current practices in software operation and maintenance, and ran RabbitMQ as Kubernetes pods backed by EBS disks through PersistentVolumeClaims. This follows the microservices architecture pattern, where a given group of microservices has its own data bus, and a given team is also responsible for maintaining and configuring it. The advantage of this solution is that the hardware resources of the data buses belonging to different groups of microservices are kept separate. Importantly, the cost of the data bus in this case is part of the cost of the Kubernetes cluster, while disk data protection is provided by the open source Velero tool.

Before migrating to the above solution, we conducted stress and load tests to make sure that the tools used were ready for the planned migration.

Monitoring of EKS clusters

To monitor the EKS clusters and the rest of the AWS services, we configured Datadog, which makes it possible to build a single monitoring dashboard (a single pane of glass) and significantly reduces the time needed to respond to failures and analyze incidents. We also deployed the Datadog agent in the Kubernetes cluster, which lets the customer independently assess the usefulness, functionality and costs of using Datadog.

Web application deployment

We installed the application stack on the Kubernetes platform using the Helm package manager, which is currently the most widely used tool for managing applications in Kubernetes. Helm can be used in an automated way (in pipelines), and it provides a standardized way to release new versions of applications, roll back to previous versions, or remove them. It does so using definitions of a single application, or of a whole set of applications, in the form of so-called charts, i.e. definitions of all the components the cluster needs to run the application.
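In a pipeline, the three operations mentioned above (release, roll-back, removal) typically map to `helm upgrade --install`, `helm rollback` and `helm uninstall`. The sketch below only builds the command lines; the release name, chart path, namespace and values file are hypothetical.

```python
import shlex


def helm_deploy(release: str, chart: str, namespace: str, values_file: str) -> list[str]:
    """Install the release, or upgrade it if it already exists."""
    return [
        "helm", "upgrade", "--install", release, chart,
        "--namespace", namespace,
        "--values", values_file,
        "--atomic",  # roll back automatically if the upgrade fails
    ]


def helm_rollback(release: str, namespace: str, revision: int) -> list[str]:
    """Return to a previously deployed revision of the release."""
    return ["helm", "rollback", release, str(revision), "--namespace", namespace]


def helm_remove(release: str, namespace: str) -> list[str]:
    """Remove the release and the resources it created."""
    return ["helm", "uninstall", release, "--namespace", namespace]


# Hypothetical release of a web application in a dev namespace.
commands = [
    helm_deploy("web", "./charts/web", "dev", "values-dev.yaml"),
    helm_rollback("web", "dev", 3),
    helm_remove("web", "dev"),
]
for command in commands:
    print(shlex.join(command))
```

A pipeline job could pass each list to `subprocess.run(command, check=True)`; building the commands as lists avoids shell quoting problems.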

Other solutions

We used AWS S3 as the object store (static files, backups, archived logs, infrastructure state files). For data encryption in AWS services (EBS disks, AWS S3 storage, databases, etc.), integrated with native authentication and authorization methods, we used AWS KMS (Key Management Service). For dynamic parameters made available to applications through the native AWS IAM and KMS services, including encrypted secrets, initialization parameters, etc., we used the Systems Manager Parameter Store service, while for secrets that benefit from automatic rotation we implemented AWS Secrets Manager.
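From the application's point of view, reading configuration from both services can look like the sketch below. It calls `get_parameter` and `get_secret_value` in the shape boto3 clients expose them, but uses small in-memory stand-ins so that it runs without AWS credentials. All parameter names and values are hypothetical.

```python
def load_settings(ssm, secrets, parameter_names, secret_id):
    """Collect plain and encrypted parameters plus one rotated secret."""
    settings = {}
    for name in parameter_names:
        # WithDecryption makes SecureString parameters come back decrypted.
        response = ssm.get_parameter(Name=name, WithDecryption=True)
        settings[name] = response["Parameter"]["Value"]
    secret = secrets.get_secret_value(SecretId=secret_id)
    settings[secret_id] = secret["SecretString"]
    return settings


class FakeSSM:
    """Stand-in for boto3.client("ssm"), for a dry run only."""

    def __init__(self, values):
        self._values = values

    def get_parameter(self, Name, WithDecryption=False):
        return {"Parameter": {"Name": Name, "Value": self._values[Name]}}


class FakeSecrets:
    """Stand-in for boto3.client("secretsmanager"), for a dry run only."""

    def __init__(self, values):
        self._values = values

    def get_secret_value(self, SecretId):
        return {"SecretString": self._values[SecretId]}


# Hypothetical parameter and secret names.
settings = load_settings(
    FakeSSM({"/dev/web/log_level": "info"}),
    FakeSecrets({"dev/web/db_password": "example-only"}),
    parameter_names=["/dev/web/log_level"],
    secret_id="dev/web/db_password",
)
print(sorted(settings))
```

In a real deployment the two stand-ins would be replaced by `boto3.client("ssm")` and `boto3.client("secretsmanager")`, with access granted through IAM.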

Summary

For NeuroSYS, we migrated the nsFlow platform to an AWS environment based on Kubernetes clusters. The whole environment was created in accordance with the IaC approach. Because the client planned to build a team dedicated to 24/7 support of the migrated application after the migration, the whole process was carried out in line with the CI/CD philosophy, so that the client's employees could get up to speed with the project on an ongoing basis. Moreover, all implemented code was accompanied by README files containing basic information about each solution used. Thanks to this, onboarding the client's team went quickly and smoothly.
